Google Bids To Become AI Control Plane For U.S. National Labs

Google is partnering with the U.S. Department of Energy’s National Labs under the White House Genesis Mission to provide “Gemini for Government” and related AI tools via Google Cloud’s public sector stack. All 17 National Labs get an accelerated access program to Gemini-based “AI co‑scientist” and other agentic tools, delivered under existing GSA OneGov terms.

My Analysis:

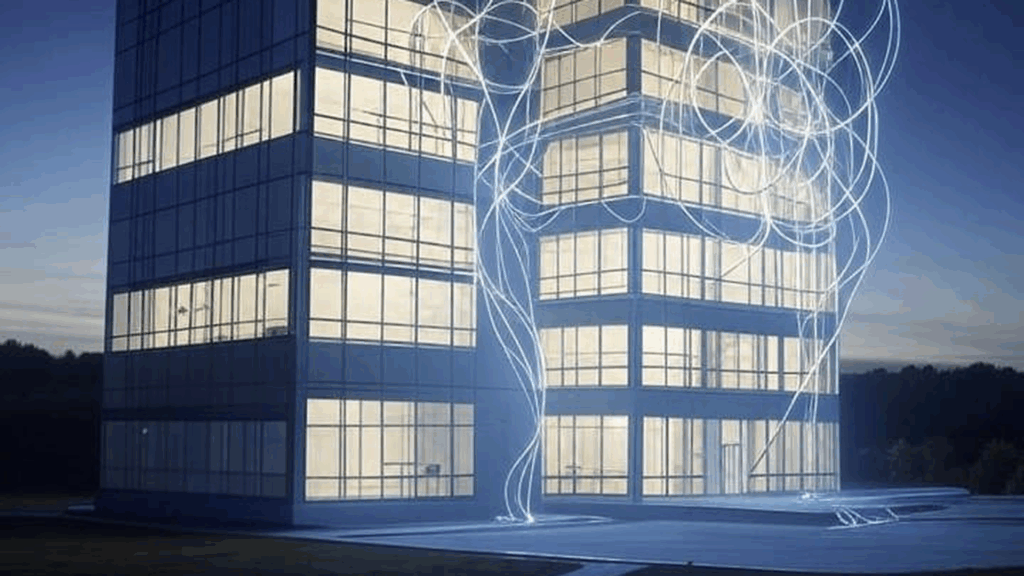

This is Google trying to anchor itself as the AI orchestration layer for U.S. federal science infrastructure. The interesting part is not the model branding; it is the positioning: Gemini for Government as a secure, accredited, federated AI fabric that sits on top of DOE’s existing HPC, experimental facilities, and data repositories.

Read between the lines. The National Labs already operate massive on‑prem HPC clusters and bespoke facilities. Those are built around CPUs, GPUs, custom interconnects, and deep integration with instruments. Google is not replacing that. It is offering a cloud AI “brain” to sit above, coordinate workflows, and mine data across labs. That is a classic neocloud move: become the AI control plane for specialized infrastructure you do not fully own.

From an infrastructure lens, a few signals stand out:

GPUs and accelerators: The work they describe, like multi‑agent “AI co‑scientist,” deep literature mining, and orchestration of simulation ensembles, is GPU‑hungry. If DOE workloads start leaning on Gemini in Google Cloud for front‑end intelligence, that shifts more federal GPU spend toward Google’s data centers instead of solely on‑prem or other hyperscalers. This is a quiet land grab for AI training and inference cycles attached to federal science.

Hybrid and data gravity: They explicitly talk about orchestrating analysis pipelines across hybrid cloud resources. That implies data stays split: experimental and simulation data in DOE facilities and HPC centers, AI reasoning layers in Google. The technical challenge will be bandwidth, latency, and data egress rules between secure government networks and commercial cloud. Anyone designing future DOE facilities will need to plan for more north‑south bandwidth out to public cloud, not just east‑west bandwidth within on‑prem clusters.
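To put rough numbers on the bandwidth concern, here is a back‑of‑the‑envelope sketch in Python. The function name, the 500 TB dataset, the link speeds, and the 0.7 efficiency factor are all illustrative assumptions, not figures from Google or DOE.

```python
def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate hours needed to move a dataset over a network link.

    dataset_tb : dataset size in decimal terabytes (1 TB = 10**12 bytes)
    link_gbps  : nominal link speed in gigabits per second
    efficiency : assumed fraction of nominal speed actually achieved (0 < e <= 1)
    """
    if dataset_tb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, link speed > 0, efficiency in (0, 1]")
    bits = dataset_tb * 1e12 * 8                      # bytes -> bits
    seconds = bits / (link_gbps * 1e9 * efficiency)   # effective throughput
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical case: a 500 TB simulation archive over 10 and 100 Gb/s links.
    for gbps in (10, 100):
        print(f"{gbps} Gb/s: {transfer_hours(500, gbps):.1f} hours")
```

Under these assumptions, the hypothetical 500 TB archive needs roughly 159 hours at 10 Gb/s and about 16 hours at 100 Gb/s. That gap is why a hybrid design would likely favor sending queries and summaries to the cloud rather than raw data.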

Sovereign AI and control: This is not full “sovereign AI” in the strict sense. The models are still Google’s, in Google facilities, under Google’s operational control, but wrapped in government‑accredited interfaces and fed with DOE data. What we are really seeing is “sovereign workflow, foreign model,” where “foreign” means outside the government’s own control, not outside U.S. jurisdiction. Policy fights down the road will center on: Where do models train and run? Who can inspect weights? How is export control enforced if the same stack is used internationally?

Neocloud vs public cloud: Gemini for Government is effectively a vertical neocloud inside a big public cloud. Same physical data centers and GPUs, but a special regime: accreditation, contracts, compliance, and domain‑specific tooling for scientific research. It mirrors what aerospace, healthcare, and finance are doing. Expect more “X for Government” stacks that share hardware with the public cloud but behave like separate constellations from a policy and control perspective.

Vendor lock‑in and ecosystem dynamics: Having AI agents orchestrate scientific workflows, manage simulations, and stitch together datasets creates deep coupling to Google’s APIs, not just its GPUs. Once a multi‑lab research program builds its pipelines around Gemini agents, switching to another vendor is non‑trivial. This is Google competing head‑on with Microsoft’s strong federal and research presence and pre‑empting specialized neoclouds that might offer DOE‑specific AI stacks.
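One common way to soften this kind of API coupling is to have pipelines depend on a thin interface rather than on a vendor SDK directly. The sketch below is a generic adapter pattern, not anything Google or DOE has described; `ReasoningBackend`, `EchoBackend`, and `summarize_run` are hypothetical names, and the stub backend makes no real model call.

```python
from __future__ import annotations

from typing import Protocol


class ReasoningBackend(Protocol):
    """Minimal interface a pipeline needs from any hosted model (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in backend for local testing; a real adapter would wrap a vendor SDK."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


def summarize_run(backend: ReasoningBackend, run_id: str, metrics: dict[str, float]) -> str:
    """Pipeline step that depends only on the interface, not on a specific vendor."""
    fields = ", ".join(f"{key}={value}" for key, value in sorted(metrics.items()))
    return backend.complete(f"Summarize simulation run {run_id}: {fields}")


if __name__ == "__main__":
    print(summarize_run(EchoBackend(), "run-001", {"energy": 1.5, "steps": 200.0}))
```

An adapter like this only limits lock‑in at the call site. Agent behavior, prompt tuning, and tool integrations built around one vendor would still be costly to move.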

AI data center impact: This move means more steady, high‑value, long‑running workloads in Google’s public sector regions. That affects how and where Google builds capacity. Federal workloads tend to demand specific geographies, redundancy tiers, and compliance envelopes. Expect higher GPU density, stricter security segmentation, and possibly dedicated “Gov AI” clusters. On the facilities side, that means more power and cooling allocated to mission‑critical, long‑lived research tenants, not just elastic consumer AI use.

Operational reality for labs: Labs will not rip and replace their existing clusters. They will bolt Gemini on as an overlay to assist with literature synthesis, experiment planning, and data exploration. The real test is integration: can Gemini workflows talk cleanly to Slurm queues, storage hierarchies, and instrument control systems without breaking export control, classification rules, or data residency policies?
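As a rough illustration of what that integration test looks like in practice, the sketch below routes a workflow step either to a cloud‑assisted path or to an on‑prem Slurm job based on a data policy check. The labels, the `compute` partition, and all function names are hypothetical. Real classification and export‑control rules are far more involved, and the code only builds an `sbatch` command line without running it.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical labels; real classification and export-control schemes are far richer.
CLOUD_ELIGIBLE = {"public", "internal-unrestricted"}


@dataclass(frozen=True)
class Dataset:
    name: str
    label: str               # assumed sensitivity label attached by the lab
    export_controlled: bool  # assumed flag set by the data owner


def may_leave_facility(ds: Dataset) -> bool:
    """Allow cloud access only for eligible, non-export-controlled data."""
    return ds.label in CLOUD_ELIGIBLE and not ds.export_controlled


def build_sbatch_command(job_name: str, partition: str, script: str) -> list[str]:
    """Build (but do not run) an sbatch command line for an on-prem job."""
    return ["sbatch", f"--job-name={job_name}", f"--partition={partition}", script]


def plan_step(ds: Dataset, script: str) -> dict:
    """Decide where a step runs: cloud-assisted, or on-prem only via Slurm."""
    if may_leave_facility(ds):
        return {"route": "cloud-assisted", "dataset": ds.name}
    command = build_sbatch_command(f"analyze-{ds.name}", "compute", script)
    return {"route": "on-prem-only", "command": command}


if __name__ == "__main__":
    print(plan_step(Dataset("open-spectra", "public", False), "analyze.sh"))
    print(plan_step(Dataset("beamline-7", "public", True), "analyze.sh"))
```

The design choice worth noting is that the policy check sits in front of any routing decision, so restricted data falls back to the on‑prem queue by default instead of relying on the cloud side to refuse it.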

The Big Picture:

Zooming out, this fits several macro trends.

Sovereign AI: Governments want AI for science and defense, but they do not want to fully depend on consumer cloud environments. This is a halfway step: accredited, fenced‑off access to a commercial frontier model, anchored in U.S. jurisdiction and wrapped in federal contracting. Expect ongoing pressure from policymakers to push more of this stack into government‑controlled data centers or at least into special “gov‑only” regions with stronger sovereignty guarantees.

Neocloud vs public cloud: “Gemini for Government” is neocloud behavior inside a hyperscaler: highly specialized, domain‑specific AI services for a narrow but important vertical, with separate go‑to‑market, contracts, and compliance. As AI workloads fragment, we will see more of these carved‑out constellations (defense AI clouds, healthcare AI clouds, scientific AI clouds), each with different rules around data, export, and model usage.

AI data center construction surge: Federal AI programs are another demand vector on an already stressed GPU and power supply chain. When National Labs adopt cloud AI for research orchestration, they add relatively predictable, long‑duration workloads. That bolsters the business case for Google to build more high‑density AI capacity in or near key U.S. government regions. Local communities will increasingly see “federal‑oriented” AI data center projects, bringing NIMBY vs YIMBY politics into the AI + government space, especially around power and water.

GPU availability and supply chain: Frontier models for federal science require top‑tier accelerators and networking. Every government AI program that lands with a hyperscaler reinforces the current supply chain hierarchy: Nvidia and a short list of OEMs at the top, then the hyperscalers, then everyone else. Smaller research institutions and independent neoclouds will find it harder to compete for the same hardware when government AI demand is routed through big clouds with priority access.

Enterprise AI adoption patterns: The same pattern will carry over into large enterprises: central AI platforms that sit above existing specialized infrastructure, orchestrating workflows across on‑prem systems and public cloud AI services. That leads to an architecture where the “AI brain” lives in a vendor cloud, and the “AI body” (data, instruments, proprietary systems) stays closer to home. It simplifies adoption but tightens dependency on a small set of cloud AI providers.

Vendor ecosystem dynamics: This is another proof point that AI competition is not just about raw model quality. It is about vertical platforms, compliance envelopes, and deep integration into existing mission workflows. Google is making a strong move into U.S. federal science just as others push “sovereign AI” clouds in Europe and Asia. Expect more alliances between hyperscalers and national research agencies, as each side tries to lock in hardware, models, and funding.

Signal Strength: High

Source: Accelerating the Genesis Mission with Gemini for Government | Google Cloud Blog