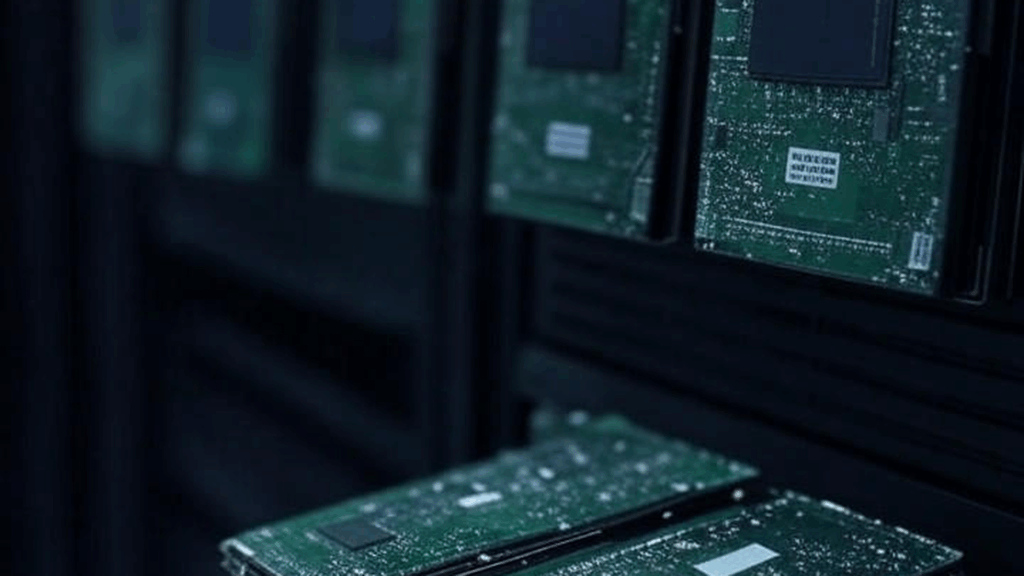

AlphaTON is getting an early tranche of NVIDIA B300 GPUs in Supermicro HGX liquid‑cooled systems via Atlantic AI, aimed at powering its Cocoon AI network tied to Telegram’s billion‑user footprint.

The move is less about raw scale and more about positioning: AlphaTON is signaling “privacy‑first, decentralized” AI infrastructure while still leaning on mainstream vendors like NVIDIA and Supermicro.

Liquid cooling and “energy‑efficient” design matter here because AlphaTON is pairing the hardware with a 2.2 MW data center deal at atNorth in Sweden, which suggests a relatively modest but high‑density, GPU‑heavy footprint.
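To put "relatively modest" in perspective, here is a back-of-envelope sketch of how many GPUs a 2.2 MW envelope could hold. Only the 2.2 MW figure comes from the announcement. The per-node power draw, the PUE, and the reading of 2.2 MW as total facility power (rather than IT load) are placeholder assumptions for illustration, and `estimate_gpu_count` is a hypothetical helper, not anything AlphaTON or atNorth has published.

```python
import math


def estimate_gpu_count(facility_kw, node_kw, pue, gpus_per_node=8):
    """Rough upper bound on GPUs that fit in a facility power envelope.

    facility_kw   -- total facility power in kW (2200 for the 2.2 MW deal)
    node_kw       -- ASSUMED power draw of one HGX node in kW (not from the release)
    pue           -- ASSUMED power usage effectiveness (facility power / IT power)
    gpus_per_node -- GPUs per HGX node; 8 is assumed here
    """
    if facility_kw <= 0 or node_kw <= 0 or gpus_per_node <= 0:
        raise ValueError("power figures and GPU count must be positive")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")

    it_kw = facility_kw / pue             # power left for IT load after cooling/overhead
    nodes = math.floor(it_kw / node_kw)   # whole nodes only
    return nodes * gpus_per_node


if __name__ == "__main__":
    # Placeholder inputs: 14 kW per node and a PUE of 1.2 are illustrative guesses.
    print(estimate_gpu_count(2200, node_kw=14.0, pue=1.2))
```

With inputs in that range the answer lands around a thousand GPUs, a meaningful cluster but small next to hyperscale builds. Real capacity also depends on networking, storage, and redundancy overhead, none of which the release specifies.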

Operationally, the dependence on partners (Atlantic AI, atNorth, Supermicro) means AlphaTON is acting as an integrator and allocator of GPU capacity rather than a vertically integrated infrastructure player.

For AI workloads, B300 HGX plus atNorth’s Nordic power profile could keep operating costs and thermal constraints reasonable, but the release gives little detail on actual cluster size, networking topology, or tenancy model.

The real signal is that AI for the Telegram/TON ecosystem will sit on conventional NVIDIA HGX stacks, not custom silicon or on‑device edge inference, which makes resource contention and GPU market dynamics a direct risk factor.

The link is worth a read to track how fast they scale beyond this first B300 allocation and how much real capacity ends up behind their privacy‑centric marketing.

Source: AlphaTON Capital Secures First NVIDIA B300 Chips with