Microsoft positions Azure as the default runway for NVIDIA Rubin-scale AI

Microsoft announced that Azure data centers are already engineered to deploy NVIDIA’s next‑gen Vera Rubin NVL72 systems at large scale, claiming they can slot the new racks into existing “AI superfactories” with minimal rework. The blog frames this as the result of multi‑year co‑design with NVIDIA across power, cooling, networking, memory, and rack‑scale architecture.

My Analysis:

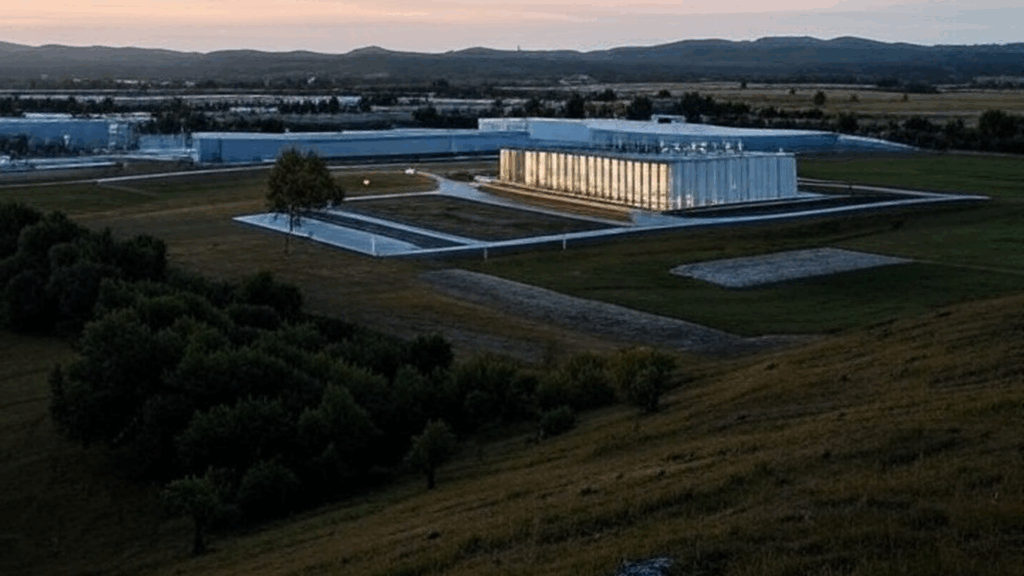

This is Microsoft saying the Rubin era will not require a forklift rebuild for Azure. They have been pre‑wiring sites like Fairwater (Wisconsin, Atlanta) for Rubin‑class thermals, power density, and networking, and they want enterprises to see that as a durable edge.

The key signal is the shift from “buy GPUs” to “co‑design full AI factories.” Rubin is 5x the NVFP4 inference performance per rack versus GB200 NVL72. That kind of jump forces a rethink of power distribution, liquid cooling loops, rack weights, and pod‑level networking. Microsoft is telling us they baked Rubin’s design assumptions into their next‑gen regions years ago. That is a strong moat in a market where most enterprises and smaller clouds are still figuring out how to safely run Hopper.

This also reinforces the consolidation trend around NVIDIA at the very high end. Azure is touting Rubin, GB200, GB300, Quantum‑2 InfiniBand, and tight platform integration. If you are an enterprise wanting state‑of‑the‑art NVIDIA at scale, this message is: do it here, not by yourself. That pulls spend away from on‑prem science projects and weaker clouds who cannot match power, cooling, or fabric design at Rubin densities.

The systems view matters. GPU utilization is often the real bottleneck, not just GPU count. Microsoft is leaning on:

- Offload engines like Azure Boost and HSM silicon to free CPU from IO, networking, and security overhead.

- High‑throughput storage and proximity placement to keep multi‑rack training jobs fed.

- AKS and CycleCloud tuned for massive clusters.

Rubin‑class clusters will be unforgiving of any weak link. If your storage or scheduler cannot keep up, you pay for dark silicon. Azure’s pitch is “we have already found and fixed those weak links at GB200 scale.”

From an energy and facilities perspective, this underlines how AI data centers are becoming single‑purpose superfactories. You do not casually retrofit a general‑purpose cloud region for Rubin racks. You plan power feeds, substations, liquid cooling, and pod layouts years ahead. That favors hyperscalers with capital, permitting muscle, and deep NVIDIA partnerships. It also raises the bar for sovereign AI players who want Rubin on their own soil. They now compete not only for GPUs, but for the capability to host Rubin thermal profiles safely and reliably.

For enterprises, the operational read is clear. If you want top‑end NVIDIA, your default near‑term options are:

- Consume it as a managed platform from Azure and similar hyperscalers.

- Partner with a neocloud that can ride on these hyperscalers or co‑locate near them.

Very few will build Rubin‑ready facilities themselves. Even many sovereign and regulated environments will end up using Azure as the “AI superfactory” behind a sovereign compliance wrapper.

The Big Picture:

This announcement sits at the intersection of several macro trends:

AI data center construction surge: Microsoft is validating that “AI superfactories” are now a distinct class of facility. They are designing for Rubin‑class power density and liquid cooling from day one. General cloud regions are no longer enough. This drives larger, more specialized campuses, with heavier grid and water negotiations.

AI hardware arms race: Rubin at 5x rack‑level inference throughput over GB200 NVL72 shows the cadence of NVIDIA’s road map. Microsoft is co‑optimizing entire sites for each generation. That deepens the NVIDIA‑Azure axis and makes it harder for AMD or custom accelerators to displace them at the very high end, unless those vendors also engage in early co‑design.

GPU availability and supply chain: When a hyperscaler can prove “we are Rubin‑ready at scale,” it becomes a priority landing zone for NVIDIA allocation. That further constrains supply for smaller clouds and on‑prem buyers. Rubin‑class shipments will likely go first to partners with proven power, cooling, and networking readiness. Azure is raising its hand as launch infrastructure.

Neocloud vs public cloud and cloud repatriation: This story cuts against broad repatriation for frontier training and massive inference. Enterprises may still repatriate steady‑state workloads or smaller AI tasks, but Rubin‑scale work is being architected for hyperscaler AI factories. The neoclouds that win in this space will be the ones that build on or near Azure‑class facilities, not generic colos.

Sovereign AI: Countries and large regions that want sovereign AI with Rubin‑class performance will need either tight partnerships with Microsoft / similar hyperscalers or must fund comparable AI factories themselves. The bar is rising beyond “have GPUs in a local DC” to “run Rubin safely at full performance envelope.” That is a much bigger facilities and policy lift.

Energy and water constraints: Rubin racks at exascale‑class NVFP4 numbers per rack are not free from a utility perspective. Even if Microsoft does not publish power figures here, the direction is obvious. Future AI campuses will face more pushback from communities and regulators over grid impact and water use for liquid cooling. Azure’s focus on custom heat exchangers hints at increasing attention to thermal efficiency, but the macro trend is clear. AI is now a major grid planning input.

Microsoft is locking in its role as one of the few platforms capable of standing up Rubin‑class AI at scale, on time, and with high utilization. That amplifies NVIDIA’s central role, concentrates frontier AI in a handful of AI superfactories, and raises the infrastructure bar for everyone else.

Signal Strength: High