CoreWeave was named the first NVIDIA Exemplar Cloud for training on the new GB200 NVL72 systems, after exceeding NVIDIA’s own reference performance targets on standard CoreWeave clusters. The validation focused on Model FLOPs Utilization (MFU) and stability at scale, using 8 racks of GB200 NVL72 connected via Quantum‑2 InfiniBand and managed by CoreWeave’s Mission Control stack.
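For readers new to the metric, MFU is the model FLOPs a training job actually delivers per second divided by the hardware’s theoretical peak. The minimal Python sketch below makes two assumptions. It uses the common 6N‑FLOPs‑per‑token approximation for dense transformer training, which ignores attention FLOPs. Its per‑GPU peak is a placeholder, not an official GB200 specification. The model size and throughput are also hypothetical. This is not NVIDIA’s or CoreWeave’s benchmark code.

```python
# Hypothetical sketch: estimate Model FLOPs Utilization (MFU) for dense
# transformer training. Not NVIDIA's or CoreWeave's benchmark code.

GPUS_PER_NVL72_RACK = 72
# Placeholder peak dense BF16 throughput per GPU (FLOP/s). This is an
# assumption: substitute the vendor-published figure for your precision.
PLACEHOLDER_PEAK_FLOPS_PER_GPU = 2.5e15


def training_flops_per_token(n_params: float) -> float:
    """~6N FLOPs per token: ~2N forward + ~4N backward (attention ignored)."""
    return 6.0 * n_params


def mfu(n_params: float, tokens_per_second: float,
        n_gpus: int, peak_flops_per_gpu: float) -> float:
    """Achieved model FLOP/s divided by the cluster's theoretical peak FLOP/s."""
    achieved = training_flops_per_token(n_params) * tokens_per_second
    peak = n_gpus * peak_flops_per_gpu
    return achieved / peak


if __name__ == "__main__":
    n_gpus = 8 * GPUS_PER_NVL72_RACK  # 8 racks -> 576 GPUs
    # Hypothetical 70B-parameter model measured at a hypothetical 1.2M tokens/s.
    value = mfu(70e9, 1.2e6, n_gpus, PLACEHOLDER_PEAK_FLOPS_PER_GPU)
    print(f"MFU = {value:.1%}")  # prints "MFU = 35.0%"
```

Note that MFU counts only the FLOPs the model mathematically requires. Recomputation and other overheads do not raise it, which is why it is a fair basis for comparing providers.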

My Analysis:

This is NVIDIA putting a public “gold star” on CoreWeave’s GB200 environment for large‑scale training. That matters because GB200 capacity will be scarce, expensive, and politically allocated. Being first in line as an Exemplar Cloud effectively makes CoreWeave a reference design for running GB200 at scale: tightly integrated racks, full‑stack observability, automated straggler detection, and high MFU.

The key technical signal is not just “we’re fast”; it is “we can keep MFU high and predictable on production hardware under fault conditions.” During validation they intentionally injected an underperforming component and let Mission Control detect and handle it in real time. That is the operational differentiator enterprises and model labs actually care about: fewer stuck jobs, fewer silent laggards, and consistent time‑to‑train.
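A single laggard matters because synchronous data‑parallel training makes every step wait for the slowest GPU. One degraded component therefore slows the whole job. The minimal Python sketch below models that failure mode. It assumes a synchronous all‑reduce after every step, treats the median rank as “healthy” speed, and uses hypothetical step times. It is not a reconstruction of CoreWeave’s fault‑injection test.

```python
from statistics import median
from typing import Sequence


def sync_step_time(per_rank_seconds: Sequence[float]) -> float:
    """Synchronous data-parallel steps end in a collective (e.g. all-reduce),
    so no rank can finish the step before the slowest rank does."""
    return max(per_rank_seconds)


def effective_mfu(baseline_mfu: float, per_rank_seconds: Sequence[float]) -> float:
    """Scale a healthy-cluster MFU by how much the slowest rank stretches a step.

    Assumes the median rank runs at healthy speed and that useful work per
    step is unchanged, so only wall-clock time grows.
    """
    healthy = median(per_rank_seconds)
    return baseline_mfu * healthy / sync_step_time(per_rank_seconds)


if __name__ == "__main__":
    # Hypothetical: 575 healthy GPUs at 1.00 s/step, one degraded GPU at 1.25 s/step.
    ranks = [1.0] * 575 + [1.25]
    print(f"{effective_mfu(0.40, ranks):.1%}")  # prints "32.0%"
```

In this toy case, one GPU that is 25% slow drags the MFU of all 576 GPUs from 40% to 32%. That is why “silent laggards” are costly.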

From an infrastructure lens, this pushes three trends:

1. GPU supply chain and specialization

GB200 NVL72 will ship in limited volume and is effectively a rack‑scale appliance: 72 Blackwell GPUs and 36 Grace CPUs in a single NVLink domain. NVIDIA wants partners that can deliver near‑lab performance in the wild. CoreWeave’s designation shows NVIDIA is comfortable routing high‑value, high‑profile training to a neocloud, not just to the big three hyperscalers. That shifts some GPU gravity toward specialized AI clouds designed around dense racks, lossless fabrics, and rack‑aware schedulers.

2. Full‑stack control and “AI operating systems”

Mission Control is essentially a fleet operating system for production AI, built from CoreWeave’s experience running real GPU fleets. Predictive failure detection, per‑component quality gating, GPU straggler detection, and automated job restarts are the operational plumbing hyperscalers usually keep in‑house. Presenting this as the “operating standard for AI” is CoreWeave’s way of saying: public clouds give you instances; we give you a tuned cluster that behaves like a single coherent machine.
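To make “GPU straggler detection” concrete, the Python sketch below shows one simple way such a check could work. It flags any rank whose recent median step time exceeds the fleet median by more than a tolerance. The function name, the 10% tolerance, and the 5‑step window are hypothetical. The heuristic is a stand‑in, not Mission Control’s algorithm, which the article does not describe.

```python
from statistics import median
from typing import Dict, List


def find_stragglers(step_times: Dict[int, List[float]],
                    tolerance: float = 0.10,
                    window: int = 5) -> List[int]:
    """Return ranks whose recent median step time exceeds the fleet median
    by more than `tolerance` (0.10 means more than 10% slower).

    Hypothetical heuristic for illustration; not Mission Control's algorithm.
    """
    # Median over the last `window` steps per rank damps one-off spikes,
    # such as a step that coincides with checkpoint I/O.
    per_rank = {
        rank: median(times[-window:])
        for rank, times in step_times.items()
        if len(times) >= window  # skip ranks without enough history yet
    }
    if not per_rank:
        return []
    fleet = median(per_rank.values())
    threshold = fleet * (1.0 + tolerance)
    return sorted(rank for rank, t in per_rank.items() if t > threshold)


if __name__ == "__main__":
    history = {
        0: [1.00] * 10,
        1: [1.01] * 10,
        2: [1.30] * 10,  # degraded GPU
        3: [0.99] * 10,
    }
    print(find_stragglers(history))  # prints "[2]"
```

The check compares each rank against its peers rather than against a fixed target. This keeps it valid across models and batch sizes. In a production pipeline, a flagged rank would presumably trigger node cordoning and an automated restart, but that orchestration is outside this sketch.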

3. Economics and enterprise implications

For large training runs, a few percentage points of MFU can mean millions of dollars and weeks of schedule. Enterprises and labs planning 10‑ to 100‑million GPU‑hour projects will compare “effective MFU plus failure behavior” across providers, rather than list price per GPU‑hour alone. NVIDIA’s standardized Exemplar benchmarks are a signaling tool. They let buyers justify moving training off general‑purpose clouds to a neocloud that demonstrates higher utilization publicly.
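The “few percentage points” claim follows from simple arithmetic. For a fixed amount of useful compute, GPU‑hours scale inversely with MFU. The back‑of‑the‑envelope Python sketch below illustrates this. The 100‑million GPU‑hour project size comes from the range above. The 40% and 43% MFU figures, the $4 per GPU‑hour price, and the 20,000‑GPU cluster are hypothetical placeholders, not numbers from the article.

```python
from dataclasses import dataclass


@dataclass
class MfuSavings:
    gpu_hours: float
    dollars: float
    wall_clock_days: float


def mfu_savings(baseline_gpu_hours: float, baseline_mfu: float,
                improved_mfu: float, price_per_gpu_hour: float,
                cluster_gpus: int) -> MfuSavings:
    """For a fixed amount of useful training compute, GPU-hours scale as 1 / MFU."""
    if not 0.0 < baseline_mfu <= improved_mfu <= 1.0:
        raise ValueError("expected 0 < baseline_mfu <= improved_mfu <= 1")
    improved_gpu_hours = baseline_gpu_hours * baseline_mfu / improved_mfu
    saved = baseline_gpu_hours - improved_gpu_hours
    return MfuSavings(
        gpu_hours=saved,
        dollars=saved * price_per_gpu_hour,
        # The whole cluster runs in parallel, so divide by GPU count, then hours/day.
        wall_clock_days=saved / cluster_gpus / 24.0,
    )


if __name__ == "__main__":
    # Hypothetical: 100M GPU-hour project, 40% -> 43% MFU, $4/GPU-hour, 20,000 GPUs.
    s = mfu_savings(100e6, 0.40, 0.43, 4.0, 20_000)
    print(f"{s.gpu_hours / 1e6:.2f}M GPU-hours, ${s.dollars / 1e6:.1f}M, "
          f"{s.wall_clock_days:.1f} days")
    # prints "6.98M GPU-hours, $27.9M, 14.5 days"
```

Under these assumptions, a three‑point MFU gain saves roughly 7 million GPU‑hours, about $28 million, and around two weeks of wall‑clock time.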

On sovereign AI and locality, this also hints at a playbook. Countries and regulated sectors that want sovereign AI will not build raw colo plus loose GPUs. They will want GB200‑class pods run with this kind of telemetry, straggler handling, and lifecycle control. CoreWeave’s Mission Control approach is very exportable to regional JV builds or sovereign deployments, even if the article does not say that out loud.

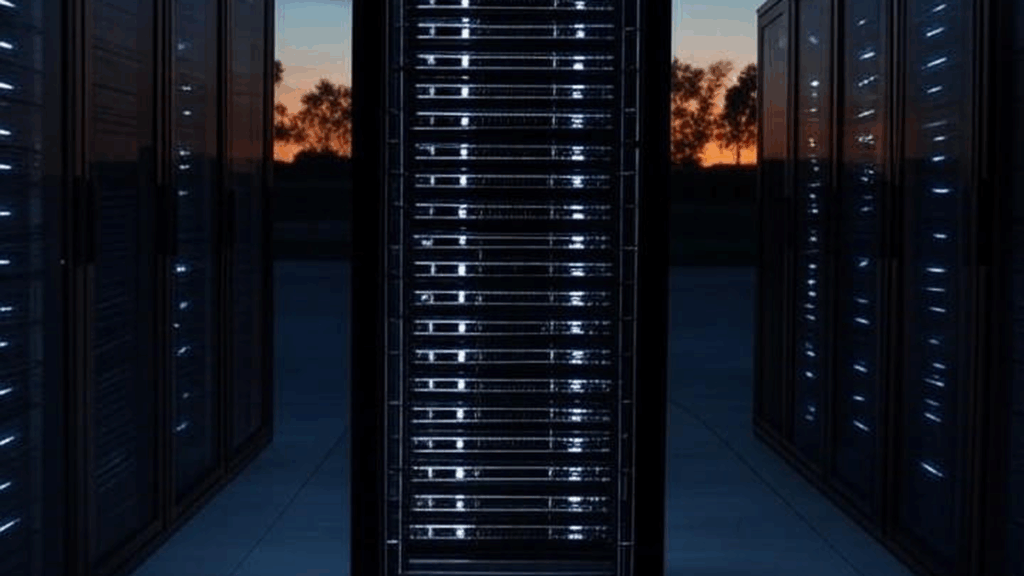

For data center design, GB200 NVL72 plus InfiniBand plus rack‑scale monitoring is a clear pivot away from “spray GPUs across generic cloud AZs” toward “treat a rack as the atomic unit of AI infrastructure.” Cooling and power are not discussed in depth here. Still, the mention of cabinet‑level monitoring and cooling telemetry suggests these racks run close to their power and thermal limits. Anyone who cannot instrument and control at that level will waste both power and GPU time.

Vendor‑wise, this is NVIDIA blessing a neocloud as an exemplar of how to run its most advanced hardware, which is a strategic nudge to both hyperscalers and on‑prem OEMs. If you want top‑tier NVIDIA hardware, you are expected to:

- Adopt their tightly integrated rack designs

- Use high‑end InfiniBand or equivalent

- Instrument everything and tune for MFU, not just raw capacity

CoreWeave is effectively a reference customer and a reference operator in one.

The Big Picture:

This touches several macro trends at once:

AI hardware arms race and GPU supply chain

GB200 is the tip of the spear in NVIDIA’s data center roadmap. Being the first Exemplar Cloud signals where early GB200 capacity will actually be usable at scale, which affects who can train frontier models on schedule. This is a quiet allocation mechanism: NVIDIA endorses operators who can turn its hardest‑to‑run systems into reliable services.

Neocloud vs public cloud

CoreWeave is the archetypal neocloud: GPU‑dense, network‑tuned, AI‑only. This certification reinforces that high‑end AI training is splitting off from generic cloud. The big clouds will still dominate “everything else” and much of inference. For 10k‑GPU training jobs, however, buyers will at least benchmark neoclouds that publish MFU metrics and carry Exemplar badges.

Enterprise AI adoption and cloud repatriation

As enterprises move from H100 proofs of concept to real multi‑month GB200 training runs, the pain points shift. They care less about “Can I get a GPU?” and more about “Will my 6‑week run silently degrade or fail on day 38?” A provider that can demonstrate superior MFU and automatic fault handling becomes a rational alternative to training on generic cloud. It may even beat standing up your own GB200 pods without comparable software.

AI data center buildout and operations

The article is really about operations, not just hardware. Predictive failure detection, straggler isolation, and rack‑level health visibility are becoming table stakes for AI data centers. Power and water constraints mean you must squeeze efficiency out of every watt and every GPU hour. High MFU plus automated recovery is how you justify dense, power‑hungry GB200 deployments to both CFOs and facility planners.

Vendor ecosystem dynamics

NVIDIA’s Exemplar program is a soft governance tool. It sets performance norms and encourages a specific vertically integrated pattern: NVIDIA hardware, NVIDIA networking, and tightly integrated NVIDIA partner clouds with their own “Mission Control” layer. Competing silicon vendors and generalist clouds now have to respond with better economics, easier multi‑tenant support, or their own utilization transparency.

Signal Strength: High