Supermicro pushes liquid‑cooled Blackwell B300 racks for dense AI factories

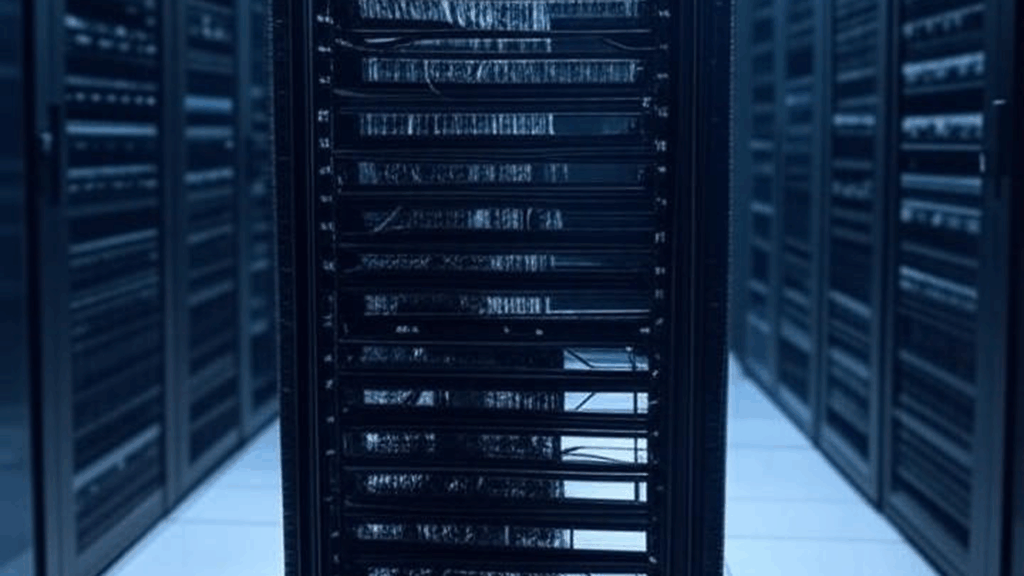

Supermicro launched new liquid‑cooled NVIDIA HGX B300 Blackwell systems in 4U (19‑inch) and 2‑OU OCP (21‑inch) formats, and says they are ready for high‑volume shipment. The designs target hyperscale and “AI factory” builds with up to 64 GPUs per standard rack or 144 GPUs per OCP ORV3 rack, using direct liquid cooling.

My Analysis:

This is Supermicro leaning hard into one of the only ways to keep the Blackwell generation deployable at scale: dense liquid‑cooled racks with integrated power and cooling engineering. Eight 1,100 W GPUs per node at 144 GPUs per rack is a power and thermals problem before it is an AI problem, and Supermicro is trying to sell “solved racks” rather than just servers.
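The density claim is easy to sanity-check with the figures quoted in this piece: eight GPUs per node, 1,100 W per GPU, and 64 or 144 GPUs per rack. The sketch below counts GPU power only. Host CPUs, NICs, pumps, and power-conversion losses are deliberately left out, so real rack draw will be higher.

```python
# Back-of-envelope GPU power per rack, using the figures quoted in the text.
# Assumption: GPU power only; host CPUs, NICs, pumps, and PSU losses are ignored.

GPU_WATTS = 1_100        # per-GPU power figure cited in the text
GPUS_PER_NODE = 8        # eight GPUs per HGX B300 node

def rack_gpu_power_kw(gpus_per_rack: int, gpu_watts: int = GPU_WATTS) -> float:
    """Return GPU-only power draw for one rack, in kilowatts."""
    return gpus_per_rack * gpu_watts / 1_000

def nodes_per_rack(gpus_per_rack: int, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Number of 8-GPU nodes needed to reach the rack GPU count."""
    return gpus_per_rack // gpus_per_node

for label, gpus in [("19-inch EIA rack", 64), ("21-inch OCP ORV3 rack", 144)]:
    print(f"{label}: {nodes_per_rack(gpus)} nodes, "
          f"{rack_gpu_power_kw(gpus):.1f} kW of GPU power")
```

Even the GPU-only figure for an ORV3 rack lands around 158 kW, which is why cooling and power delivery, rather than compute, set the first design constraints.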

The more important signal is packaging:

- 21‑inch OCP ORV3 with blind‑mate manifolds and modular trays for hyperscalers and cloud providers.

- 19‑inch 4U front‑I/O for everyone else building AI factories in legacy racks.

This splits the market into two tracks: cloud‑scale ORV3 builds and enterprise / sovereign builds that still live in 19‑inch EIA sites.

Supermicro is also pushing its Data Center Building Block and DLC‑2 story as a TCO lever:

- Capture “up to 98 percent” of system heat via liquid cooling.

- Run on 45°C warm water, cut data center power consumption (they claim up to 40 percent), and eliminate chilled water and compressors in some designs.
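To see what “up to 98 percent” heat capture means on the air side, here is a minimal sketch that splits a rack's heat load between the liquid loop and room air. The input is the GPU-only estimate from the 144 × 1,100 W figures quoted earlier, and 98 percent is Supermicro's best-case claim, not a measured value; both are assumptions for illustration.

```python
# Split a rack's heat load between the liquid loop and residual room air.
# Assumptions: heat load equals the GPU-only estimate (144 GPUs x 1,100 W),
# and the liquid capture fraction is Supermicro's "up to 98 percent" claim.

def heat_split_kw(rack_heat_kw: float, liquid_fraction: float) -> tuple[float, float]:
    """Return (heat removed by liquid, heat left for air handling) in kW."""
    if not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be between 0 and 1")
    liquid = rack_heat_kw * liquid_fraction
    return liquid, rack_heat_kw - liquid

rack_heat = 144 * 1_100 / 1_000   # GPU-only heat load in kW
liquid_kw, air_kw = heat_split_kw(rack_heat, 0.98)
print(f"liquid loop: {liquid_kw:.1f} kW, room air: {air_kw:.1f} kW")
```

A few kilowatts of residual air heat per rack is a modest load for room air handling. The warm-water supply temperature matters just as much, since it is what makes chiller-free heat rejection plausible in some designs.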

That aligns with what facilities teams actually care about: fewer chillers, simpler mechanical plants, and better PUE, not just “AI performance.”

The rack‑scale design is the key:

- 1.8 MW in‑row coolant distribution units (CDUs) that handle heat exchange at the aisle level.

- Eight compute racks plus networking racks and CDUs as a repeatable “SuperCluster” unit with 1,152 GPUs.
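The pod numbers hang together arithmetically: eight ORV3 racks at 144 GPUs each gives the 1,152‑GPU figure. The sketch below also compares GPU-only heat against a single 1.8 MW CDU. Treating one CDU as serving the whole pod, and ignoring networking racks and non-GPU load, are assumptions for illustration only.

```python
# SuperCluster sizing check using the figures quoted in the text.
# Assumptions: 144 GPUs per compute rack, 1,100 W per GPU (GPU-only load),
# and one 1.8 MW in-row CDU serving all eight compute racks.

COMPUTE_RACKS = 8
GPUS_PER_RACK = 144
GPU_WATTS = 1_100
CDU_CAPACITY_MW = 1.8

total_gpus = COMPUTE_RACKS * GPUS_PER_RACK
gpu_load_mw = total_gpus * GPU_WATTS / 1_000_000
headroom_mw = CDU_CAPACITY_MW - gpu_load_mw

print(f"GPUs per pod: {total_gpus}")                      # 1152
print(f"GPU-only heat load: {gpu_load_mw:.2f} MW")
print(f"CDU headroom for non-GPU load: {headroom_mw:.2f} MW")
```

Under these assumptions roughly 0.5 MW remains for CPUs, memory, NICs, and other non-GPU load, which suggests the 1.8 MW figure is sized for a pod of this scale rather than for a single rack.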

That is the beginning of a reference blueprint for AI factory pods that you can drop into a data hall if you have the power and water.

Networking matters here too. By baking in NVIDIA ConnectX‑8 SuperNICs and 800 Gb/s fabrics built on NVIDIA Quantum‑X800 InfiniBand or Spectrum‑4 Ethernet, Supermicro makes these racks part of NVIDIA's vertically integrated stack. That is good for performance and time‑to‑deploy, but it also tightens the NVIDIA lock‑in loop at the fabric level, not just at the GPU level.

For sovereign AI and neoclouds, this is attractive. Smaller national clouds, telcos, and specialist AI providers can order racks validated at L11/L12 (integrated and tested at the rack and multi‑rack cluster level) instead of doing their own painful integration of Blackwell, 800G fabric, and liquid cooling. It shortens the path from “we got a GPU allocation” to “we have an operational AI pod.”

The flip side: a 1.8 MW liquid‑cooled pod with warm water loops is not a fit for every brownfield data center. Power density, floor loading, water availability, and permitting are now gating factors. This pushes more customers toward greenfield AI campuses and retrofit programs, not incremental add‑ons.

My read: Supermicro is positioning itself as the “AI rack OEM” for Blackwell‑generation builds, especially for customers who want NVIDIA performance without building their own OCP and cooling engineering stack. That is a smart move in a world where GPU supply is constrained and integration talent is even more constrained.

The Big Picture:

This ties directly into several macro trends:

AI data center construction surge:

These systems assume multi‑MW pods with liquid loops and in‑row CDUs. That lines up with the current boom in AI‑specific campuses instead of generic colos. Supermicro is shipping not just boxes, but an implied reference architecture for AI factory halls.

Energy and water constraints:

The shift to warm‑water direct liquid cooling is a response to real utility and permitting pressure. “No chillers, warm water, less power for cooling” is something regulators and host communities want to hear. For operators, it is about cramming more GPUs into the same MW envelope.
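“Cramming more GPUs into the same MW envelope” is mostly a PUE calculation: for a fixed utility feed, every watt not spent on cooling is available to IT load. A minimal sketch, where the 10 MW feed, both PUE values, and the 1.5 kW all-in per-GPU figure (GPU plus its share of host, network, and conversion overhead) are hypothetical inputs, not Supermicro or NVIDIA numbers:

```python
# How many GPUs fit under a fixed facility power envelope at a given PUE.
# All inputs are hypothetical illustrations, not vendor figures:
# - FACILITY_MW: utility feed available to the site
# - KW_PER_GPU_ALL_IN: GPU plus its share of host, network, and PSU overhead
# - the PUE values for an assumed chiller-based vs warm-water DLC design

FACILITY_MW = 10.0
KW_PER_GPU_ALL_IN = 1.5

def gpus_in_envelope(facility_mw: float, pue: float, kw_per_gpu: float) -> int:
    """IT power = facility power / PUE; return whole GPUs that fit in it."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_kw = facility_mw * 1_000 / pue
    return int(it_kw // kw_per_gpu)

for label, pue in [("chilled-water design", 1.5), ("warm-water DLC design", 1.15)]:
    count = gpus_in_envelope(FACILITY_MW, pue, KW_PER_GPU_ALL_IN)
    print(f"{label} (PUE {pue}): {count} GPUs")
```

The absolute counts depend entirely on the inputs; the point is the ratio, since IT capacity at a fixed feed scales with 1/PUE.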

GPU availability and supply chain:

By broadening its Blackwell portfolio and being “ready for high‑volume shipment,” Supermicro is signaling that it intends to be one of the main channels for scarce NVIDIA B300 capacity. If you can secure Blackwell, you also need someone who can actually ship the racks, manifolds, and CDUs on time. That strengthens Supermicro’s role in the NVIDIA supply chain and limits options for buyers that want non‑NVIDIA fabrics or more open platforms.

Neocloud vs public cloud and sovereign AI:

These rack‑scale, pre‑validated solutions are exactly what neoclouds and sovereign operators need. They let you stand up an NVIDIA‑aligned AI region outside the big three clouds without recreating hyperscaler engineering. Expect national clouds, regulated industries, and defense / federal environments to look at these Blackwell racks as building blocks for sovereign AI clusters.

Vendor ecosystem dynamics:

Supermicro is doubling down on deep NVIDIA integration: GPUs, networking, software stack (NVIDIA AI Enterprise, Run:ai) all certified together. That makes Supermicro stickier as an NVIDIA system partner, but also narrows its differentiation versus other NVIDIA‑first OEMs to “we ship faster and pack denser with better liquid cooling.” For enterprises, it simplifies procurement at the cost of optionality around non‑NVIDIA accelerators and fabrics.

Enterprise AI adoption and cloud repatriation:

L11/L12 “ready racks” reduce the integration tax for enterprises and government agencies that want to bring AI training in‑house, either for cost, data residency, or security reasons. If you are evaluating repatriation of large AI workloads from public cloud, this type of rack is exactly what your infra and facilities teams will price against your current cloud GPU bill.
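The comparison those teams will run is essentially a break-even calculation. The sketch below is a deliberately simplified model: the cloud rate, rack capital cost, amortization period, operating cost, and utilization are all hypothetical parameters to be replaced with real quotes, and it ignores financing, staffing ramp, and facility build-out.

```python
# Simplified cloud-vs-owned GPU cost comparison on an annual basis.
# Every input is a hypothetical placeholder, not a quoted price.

from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365

@dataclass
class OwnedRack:
    capex: float            # purchase price of the rack, in dollars
    amortize_years: float   # straight-line depreciation period
    opex_per_year: float    # power, cooling, space, support, in dollars
    gpus: int

def annual_cost(rack: OwnedRack) -> float:
    """Straight-line capex plus yearly operating cost."""
    return rack.capex / rack.amortize_years + rack.opex_per_year

def owned_cost_per_gpu_hour(rack: OwnedRack, utilization: float) -> float:
    """Annualized owned cost divided by GPU-hours actually used."""
    return annual_cost(rack) / (rack.gpus * HOURS_PER_YEAR * utilization)

def break_even_utilization(rack: OwnedRack, cloud_rate_per_gpu_hour: float) -> float:
    """Utilization where owned cost per used GPU-hour equals the cloud rate.

    A result above 1.0 means owning never beats that cloud rate.
    """
    return annual_cost(rack) / (rack.gpus * HOURS_PER_YEAR * cloud_rate_per_gpu_hour)

# Hypothetical example inputs, for illustration only.
rack = OwnedRack(capex=4_000_000, amortize_years=4, opex_per_year=500_000, gpus=64)
cloud_rate = 6.0  # hypothetical $/GPU-hour
print(f"break-even utilization: {break_even_utilization(rack, cloud_rate):.0%}")
```

The useful output is the break-even utilization: if your AI workloads would keep owned GPUs busier than that, repatriation starts to pay under these simplified assumptions.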

Overall, this is not a headline about a new chip. It is a signal that the ecosystem is now focused on rack‑scale, liquid‑cooled, multi‑MW AI factory units as the default deployment target for Blackwell.

Signal Strength: High