Traefik Labs joined the HPE Unleash AI Partner Program and integrated its Triple Gate security architecture with HPE Private Cloud AI, which HPE co‑developed with NVIDIA. The combined stack lets customers run GPU‑accelerated AI workloads, safety filtering, agent governance, and API security entirely on HPE infrastructure with zero external dependencies, including in air‑gapped environments.

My Analysis:

This is a clear signal that sovereign AI is moving from slideware to concrete reference architectures. HPE is packaging a full “keep everything on your metal” AI runtime: NVIDIA GPUs, NIM microservices, and Traefik’s three‑layer gateway stack for safety, agent access, and backend API control.

The key is not just security. It is architectural independence from hyperscaler services: no external calls for prompt safety, policy, or routing. That matters for intelligence, healthcare, and financial services, where a single outbound dependency can break compliance or national policy. It also matters for GPU utilization. To justify a private GPU cluster or an HPE Private Cloud AI install, you need to run the full inference and control plane on‑prem, not bounce to the cloud for safety or orchestration.
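One way to make "no external calls" checkable is to audit the endpoints a deployment is configured to use. The sketch below is a minimal illustration, not part of any HPE or Traefik tooling: the endpoint names, URLs, and the `.corp.internal` suffix are all hypothetical, and it inspects configuration only, without resolving DNS or watching live traffic.

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical internal DNS suffix; substitute your own.
INTERNAL_SUFFIXES = (".corp.internal",)

def is_internal(url: str) -> bool:
    """Return True if the URL's host is a private/loopback IP or an internal name."""
    host = urlparse(url).hostname or ""
    try:
        ip = ipaddress.ip_address(host)
        return ip.is_private or ip.is_loopback
    except ValueError:
        # Not an IP literal: fall back to a name-based allow list.
        return host == "localhost" or host.endswith(INTERNAL_SUFFIXES)

def external_dependencies(endpoints: dict[str, str]) -> list[str]:
    """Names of configured endpoints that would leave the local environment."""
    return sorted(name for name, url in endpoints.items() if not is_internal(url))

# Hypothetical configuration for illustration only.
endpoints = {
    "inference": "http://10.0.4.12:8000/v1/chat/completions",
    "safety": "https://safety.corp.internal/v1/check",
    "policy": "https://policy.example.com/evaluate",
}
print(external_dependencies(endpoints))
```

In this made-up configuration only the `policy` endpoint is flagged, which is exactly the kind of single outbound dependency that would fail a sovereignty review.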

Triple Gate maps cleanly to how real enterprises segment risk:

- AI Gateway runs Nemotron / Safety NIMs on the same NVIDIA GPUs to sanitize prompts before model access.

- MCP Gateway wraps AI agents with task‑level controls over systems like Salesforce or Oracle, which is how regulated teams think: by task and transaction, not vague “RBAC for agents.”

- API Gateway applies the same enterprise patterns we already know: TLS, mTLS, rate limiting, DLP, secrets.
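The three layers above can be pictured as sequential admission checks. The following is a toy sketch under stated assumptions, not Traefik's implementation or API: the keyword filter stands in for a safety model, the agent and task names are invented, the gate ordering is a guess, and only rate limiting is modeled for the API layer (no TLS, DLP, or secrets handling).

```python
from dataclasses import dataclass, field
from typing import Optional
import time

# Gate 1 (AI Gateway stand-in): a toy keyword filter in place of a safety model.
BLOCKED_TERMS = ("ignore previous instructions",)

def ai_gate(prompt: str) -> bool:
    lowered = prompt.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)

# Gate 2 (MCP Gateway stand-in): task-level allow list per agent (hypothetical names).
AGENT_TASKS = {
    "billing-agent": {("salesforce", "read_invoice")},
}

def mcp_gate(agent: str, system: str, task: str) -> bool:
    return (system, task) in AGENT_TASKS.get(agent, set())

# Gate 3 (API Gateway stand-in): fixed-window rate limit per caller.
@dataclass
class RateLimiter:
    limit: int
    window_s: float = 60.0
    _hits: dict = field(default_factory=dict)

    def allow(self, caller: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        start, count = self._hits.get(caller, (now, 0))
        if now - start >= self.window_s:
            start, count = now, 0  # window expired, start a new one
        if count >= self.limit:
            return False
        self._hits[caller] = (start, count + 1)
        return True

def admit(prompt, agent, system, task, limiter, now=None) -> str:
    """Run a request through all three gates and report the first failure."""
    if not ai_gate(prompt):
        return "blocked: prompt safety"
    if not mcp_gate(agent, system, task):
        return "blocked: task not permitted"
    if not limiter.allow(agent, now):
        return "blocked: rate limit"
    return "allowed"

limiter = RateLimiter(limit=2)
print(admit("Show invoice 42", "billing-agent", "salesforce", "read_invoice", limiter))
print(admit("Delete account", "billing-agent", "oracle", "delete_record", limiter))
```

The point of the sketch is the shape: the agent check is expressed as (system, task) pairs rather than broad roles, which mirrors the task-and-transaction framing in the MCP Gateway bullet.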

This is how you make AI look like a “normal” Tier 1 application in a data center, instead of a science project. For HPE, this strengthens its pitch as the provider of hardware plus a “sovereign AI control plane.” For Traefik, it is a strong move from app ingress into AI runtime security, tied directly to GPU‑accelerated stacks and NVIDIA NIMs.

The offline and air‑gapped emphasis matters for facilities strategy too. If you can keep all AI logic and safety local, you can place AI clusters in secure sites that are heavily firewalled or disconnected, for defense and critical infrastructure. You still have to solve power, cooling, and water to feed GPUs, but you are no longer blocked by network policy or cross‑border data movement.

For enterprises already heavily invested in HPE and GreenLake, this lowers friction to build “mini‑neoclouds” in their own racks. Governance in Git and portable policies across cloud, on‑prem, and HPE Private Cloud AI are a direct response to multi‑environment sprawl. The stack also gives some cover for cloud repatriation: you can move sensitive AI workloads back in‑house without rebuilding the safety and routing stack from scratch.

The Big Picture:

This ties into several macro trends at once:

Sovereign AI: This is textbook sovereign AI design. No external dependencies, air‑gap capable, safety and policy running on your own GPUs, and explicit targeting of intelligence and regulated industries. It aligns with governments and large enterprises that want AI while keeping data and control within their own legal and physical borders.

Neocloud vs public cloud: HPE Private Cloud AI plus Traefik’s sovereign runtime is a neocloud pattern. You get cloud‑style AI services, but anchored to your racks, your facilities, and your compliance posture. This is where a lot of serious spend in Europe and government is heading instead of pure public cloud.

GPU availability and supply chain: NVIDIA is embedded here via NIM microservices and NVIDIA Accelerated Computing. This continues the pattern: sovereign AI still depends on NVIDIA’s GPU pipeline and software stack. HPE’s role is to turn that into a consistent delivery and support channel for enterprises that do not want to assemble parts themselves.

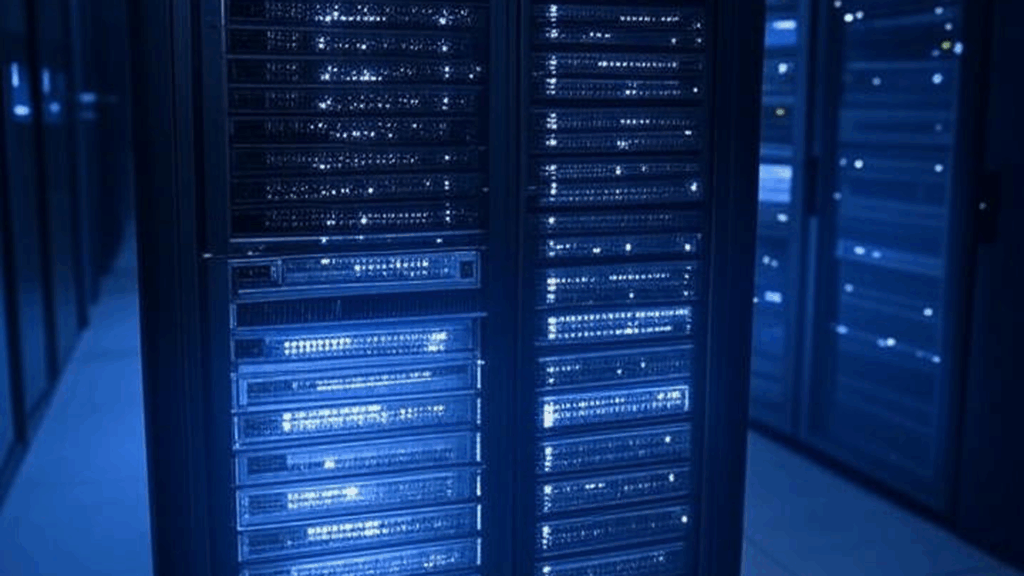

AI data center buildout: Offerings like this help justify new on‑prem AI pods or expansions of existing HPE‑based data centers. If you can check the boxes on sovereignty, safety, and governance out of the box, it becomes easier to win internal approvals for dense GPU installations in regulated sectors.

Vendor ecosystem dynamics: This is a tight three‑way alignment between HPE, NVIDIA, and a software control‑plane player. Hyperscalers typically provide their own gateways and safety services. Here, Traefik is carving out the “AI ingress and governance” layer for sovereign and hybrid deployments. It is another data point that the AI stack is solidifying into three layers: GPU vendor, system integrator / OEM, and security / runtime vendor.

Enterprise AI adoption: The architecture is designed to match how enterprises already operate, with Git‑based policy, API‑centric security, and explicit support for systems like Salesforce and Oracle through agent controls. This shortens the distance from pilot models in a lab to production workloads on regulated data.

Cloud repatriation: For organizations that started AI in public cloud to move fast but now face regulatory pressure, this kind of stack is a landing zone. You can keep similar capabilities and controls while pulling the runtime back onto HPE hardware under your own roof.

Signal Strength: High